AI as a Learning Partner, Not Just an Automator

I have been using GitHub Copilot, mainly with GPT-5 in VS Code. It works well, but I think Cursor does a better job of integrating chat responses and agentic features into the editor. Since both let you use the same models, the difference lies in how the AI is integrated with the code editor. Interestingly, Cursor was built as a fork of VS Code, which explains why its look and functionality feel so familiar.

Cursor is a great tool for anyone who wants to try vibe coding (guiding an AI to build software using natural language), but for my own work I still prefer VS Code. Its rougher AI integration forces me to review and think about the answers and the code the agent writes, which gives me more control. Cursor is so well integrated that it's easy to just accept the changes, but that also makes it easy to accept mistakes or code you didn't want or need.

My immediate goal with these AI tools is the same as before: to use them to enhance my skills and productivity, and the productivity of those around me. Instead of spending time writing unit tests or maintenance and automation scripts, we can let AI tools write them for us and focus on the bigger goal. Of course, we still have to review the output, and that is where experience with coding and programming languages in general helps a lot. Having said this, anyone can vibe code, and I invite everyone to try it. Just get familiar with the code editor and start typing your wishes and ideas into the AI prompt. It is true that you can build something without knowing any code. You may not be able to tell when something is unnecessary or wrong, but I believe it is still a good exercise: it teaches you about the AI tool, the code editor, and ultimately the code itself. Both VS Code and Cursor offer free versions to get started.

Some experienced programmers say you shouldn't vibe code if you don't know much about programming. I disagree: you should vibe code precisely because you don't know much about programming. I used to think people without experience shouldn't use AI tools for coding, because they wouldn't be able to tell whether the output was good. I've changed my mind. Using AI tools, especially for vibe coding, can be a great learning experience.

That said, do not deploy or publish anything to production until you have verified its security and reliability. A lot of AI-generated code is not production-ready. A simple way to improve results is to include instructions like “provide production-ready suggestions” or “follow security best practices” in your prompts. Once you think you have something ready, ask the AI about reliability, security, and similar concerns. Don't just accept what it gives you; use the AI itself to examine what it wrote. You'd be surprised by the results. The good thing is that you can do all of this without knowing much about programming, because with the right prompts the AI can guide you through the process. But you have to ask.

Another thing I've noticed with large codebases is the limit imposed by context windows. Every model (LLM) has a maximum context window, measured in tokens, which matters when working with programs containing thousands of lines of code. When a model (e.g., GPT-5, Claude Sonnet, Gemini) answers, it draws on its training data and, crucially, on the data you provide as context. For example, if you reference a code file and ask the AI to improve it, the file, your question, and the AI's output all count toward the context window, along with the earlier messages in the same chat. With large applications, you can quickly hit the limit if you keep using the same chat window. The model may then fail to answer or, worse, hallucinate. The simple solution is to start a new chat window, which gives you a clean slate and plenty of context space, at least for a while.
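
To make the budget idea concrete, here is a rough Python sketch. It assumes about four characters per token, a common rule of thumb for English text (real tokenizers vary by model), and a hypothetical 8,000-token limit; real limits are usually much larger. The names `estimate_tokens` and `fits_in_context` are illustrative, not part of any tool's API.

```python
# Rough sketch: does a request still fit in a model's context window?
# ASSUMPTION: ~4 characters per token is only a rule of thumb; real
# tokenizers and real context limits vary by model.
CHARS_PER_TOKEN = 4

def estimate_tokens(text: str) -> int:
    """Approximate token count, rounding up."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN

def fits_in_context(history: list[str], file_text: str, question: str,
                    reserved_output_tokens: int, context_limit: int) -> bool:
    """Earlier chat turns, the referenced file, the question, and the room
    reserved for the answer all draw from the same token budget."""
    used = sum(estimate_tokens(turn) for turn in history)
    used += estimate_tokens(file_text) + estimate_tokens(question)
    return used + reserved_output_tokens <= context_limit

if __name__ == "__main__":
    LIMIT = 8_000                      # hypothetical limit, for illustration only
    big_file = "x = 1\n" * 4_000       # 24,000 characters, roughly 6,000 tokens
    question = "Please improve this file."
    # Fresh chat: only the file, the question, and the answer space count.
    print(fits_in_context([], big_file, question, 1_000, LIMIT))          # True
    # Same chat, asking again: the earlier copy of the file still takes space.
    print(fits_in_context([big_file], big_file, question, 1_000, LIMIT))  # False
```

The second call shows why a long-running chat runs out of room: the earlier copy of the file still occupies part of the window, which is exactly what starting a new chat clears.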

Some people suggest working with smaller pieces of code at a time, such as a single file or function, or starting multiple chat windows, as I mentioned above. This works, but the AI will never have enough context to reason about the entire application. As a developer, you do better when you know what the application does overall and are familiar with its architecture, and the same is true for the AI. So keep this in mind.

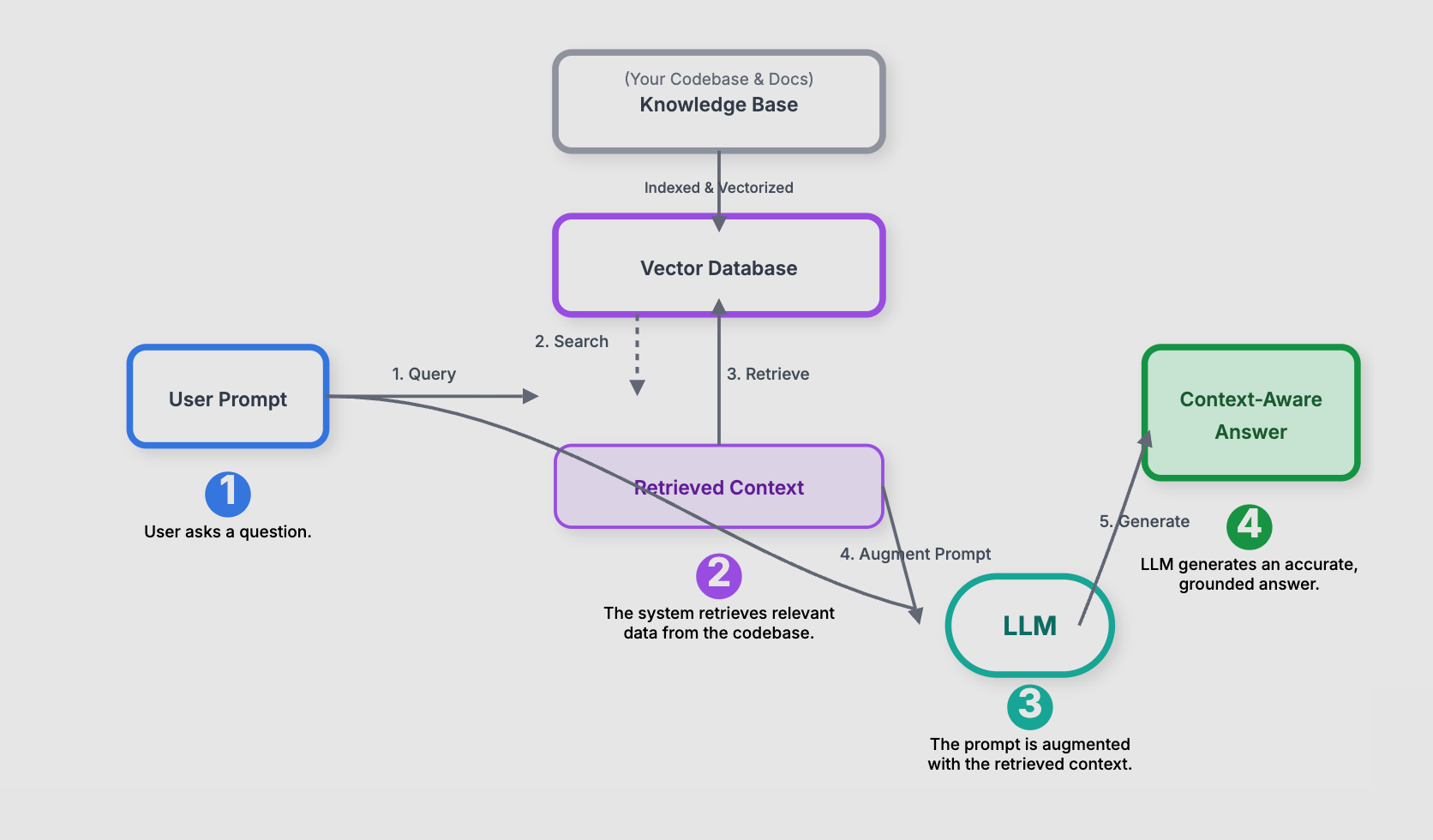

There are workarounds for context-window limits, but they require more setup and knowledge, and more advanced techniques are emerging to solve the context problem automatically. Retrieval-Augmented Generation (RAG), for example, converts your source files into vectors (embeddings) ahead of time and, at runtime, retrieves only the pieces most relevant to your question and adds them to the context. Tools like Copilot and Cursor already parse source code and manage context selection automatically. It isn't the same as providing the entire codebase as context, but it works for now.
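
As an illustration of the retrieval step, here is a toy Python sketch. It is not how Copilot or Cursor work internally: instead of a learned embedding model and a vector database, it uses word counts as stand-in vectors and cosine similarity to rank files. The file names, file contents, and function names are all hypothetical.

```python
import math
import re
from collections import Counter

def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: counts of lowercase word/identifier tokens.
    ASSUMPTION: real RAG systems use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(files: dict[str, str]) -> list[tuple[str, Counter]]:
    """Indexing step: vectorize every source file once, ahead of time."""
    return [(name, vectorize(text)) for name, text in files.items()]

def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Query step: return the names of the k files most similar to the question."""
    q = vectorize(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

if __name__ == "__main__":
    # Hypothetical mini-codebase, for illustration only.
    files = {
        "auth.py": "def login(user, password): return check_password(user, password)",
        "billing.py": "def charge_invoice(invoice, card): send_payment(card, invoice.total)",
        "report.py": "def monthly_report(invoices): return sum(i.total for i in invoices)",
    }
    index = build_index(files)
    print(retrieve(index, "Why does login reject a valid password?", k=1))  # ['auth.py']
```

Only the top-ranked files would then be placed into the prompt, which is how retrieval keeps the context small. The trade-off is the one described above: the model sees the most relevant pieces, not the whole application.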

Looking Ahead

These AI tools will continue to improve, and context windows will expand. My goal is to set up local agents that use offline models with a chat interface, drawing only on my own data. For example, I’d like to upload my writings, favorite books, emails, personal information, and photos, and have them organized and parsed so the agent can guide me based on my goals, history, and interests. I wouldn’t share this with an online model I don’t own, but I would with a local one, effectively creating a carbon copy of my memories and ideas.

At work, the use of AI tools will only increase. Most of us are using them for simple tasks, but with the rise of agents and notebooks, organizations will do much more. My goal is to use these tools for things we aren’t doing today. I don’t see them as replacements, but as new tools enabling new possibilities. Of course, they will continue to handle automation and routine writing tasks such as documentation, README files, and training materials. That is low-hanging fruit. The real benefits will come from applications most of us aren’t even considering yet.

What are your thoughts? How are you or your organization using AI tools? Are you vibe coding yet?