Programming: A short history of computer languages

What is computer programming?

Computer programming is, by definition, the action or process of writing computer programs. Unfortunately, that doesn’t tell us much.

More descriptively, programming is the act of describing processes and procedures as a list of instructions and commands for a computer to execute. The objects these instructions manipulate are numbers, words, sounds, images, and the like. The goal of manipulating them is to make a computer perform a task and produce results that would be nearly impossible for a human being to achieve in a timely and accurate manner. The tasks we instruct a computer to perform are usually very repetitive and can involve millions of calculations in a short period of time.

Many consider creating a computer program a form of art, more like composing music or designing a building. My own opinion is that computer programming is both: a mix of art and engineering. I wouldn’t consider a computer program functional and well designed if it lacked either the necessary engineering or some form of art and creativity.

How was the first computer program created?

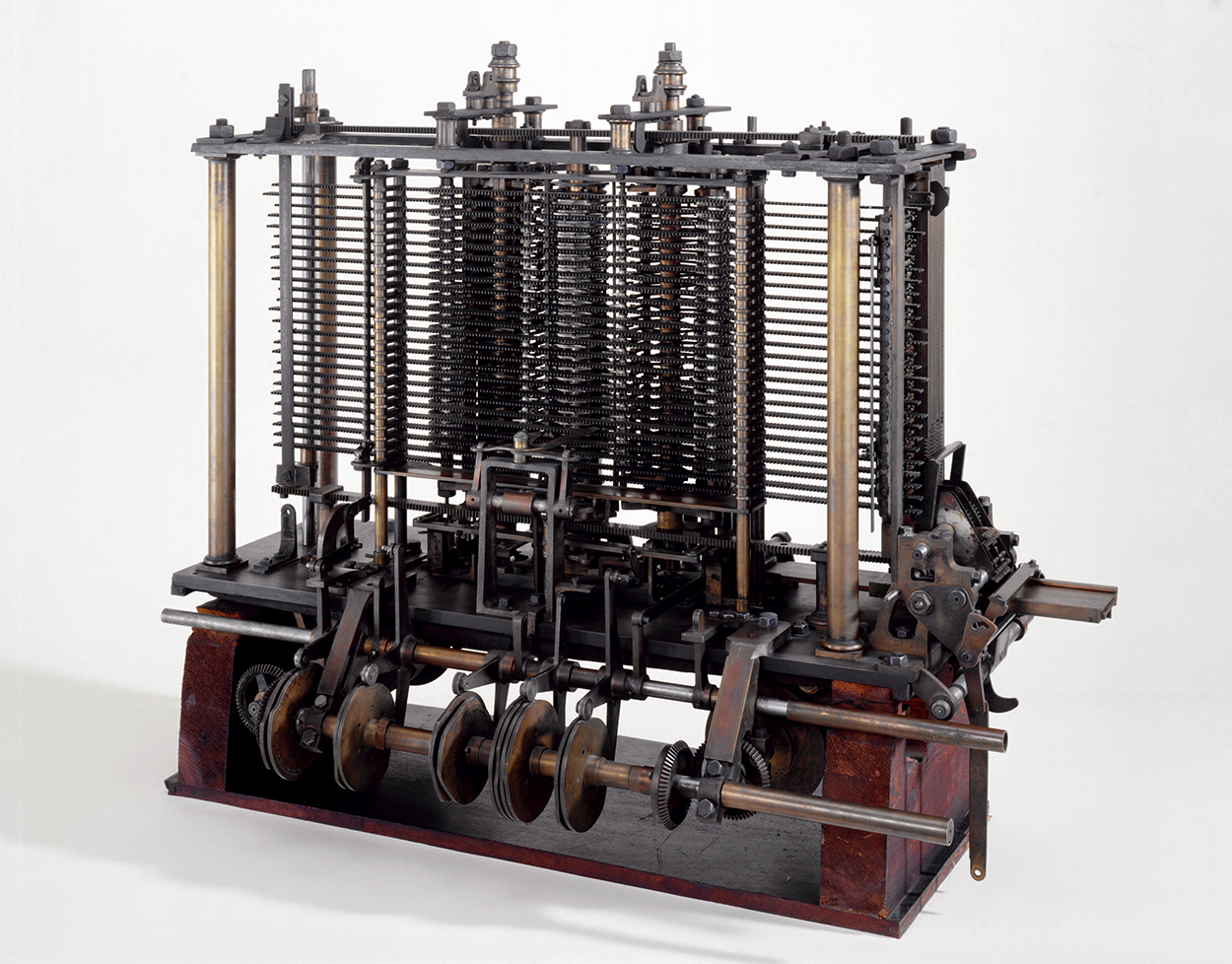

We need to go back to the 19th century. Over a period of nine months between 1842 and 1843, Ada Lovelace (1815 – 1852) translated a memoir by the Italian mathematician Luigi Menabrea (1809 – 1896) about the Analytical Engine, pictured here, and added her own set of notes to it.

The Analytical Engine was an invention of the English mathematician and computer pioneer Charles Babbage (1791 – 1871). It was a highly advanced mechanical calculator, often considered a forerunner of the electronic computers of the 20th century.

Charles Babbage first conceived the idea of an advanced calculating machine to calculate and print mathematical tables in 1812. The Analytical Engine, which he conceived in 1834, was designed to evaluate any mathematical formula and to have even higher powers of analysis than his original Difference Engine of the 1820s. Only part of the machine was completed before his death in 1871: a portion of the mill with a printing mechanism. Babbage was also a reformer, philosopher, inventor and political economist.

Ada Lovelace’s set of notes has been recognized by some historians as the world’s first computer program as it contained a groundbreaking description of the possibilities of programming the machine to go beyond number-crunching to “computing”.

Who was the first computer programmer?

Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage’s Analytical Engine as mentioned above. Her notes on the engine include what is recognized as the first algorithm intended to be carried out by a machine. Because of this, she is often regarded as the first computer programmer. She also pointed out what otherwise might have been remembered as the first computer bug in Babbage’s equations, which also makes her possibly the world’s first debugger.

![Alfred Edward Chalon [Public domain], via Wikimedia Commons](https://rcrdo.com/content/images/2015/08/ada_lovelace_chalon_portrait.jpg)

What was the first computer language?

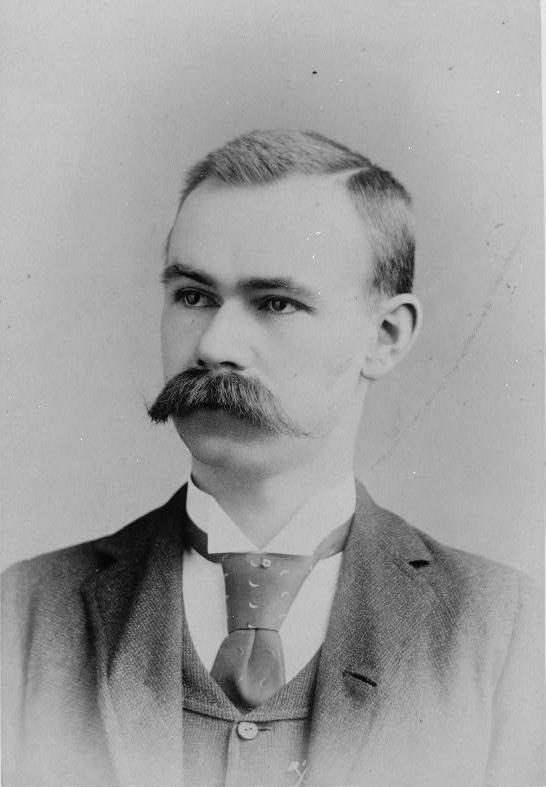

47 years after Ada Lovelace wrote what is considered the first computer program, Herman Hollerith (1860 – 1929) created what is considered the first computer language when he realized he could encode information on punch cards. Hollerith was an American statistician and inventor, and the founder of the Tabulating Machine Company, which later merged with other companies to become IBM.

His first business application was on the New York Central Railroad. He went on to install systems in utilities, a department store, and other railroads, and these led to the development of more business applications. Hollerith’s company was consolidated into the Computing-Tabulating-Recording Co. in 1911. Thomas J. Watson, Sr. took the helm in 1914, and the company was renamed IBM in 1924.

1936 – In this year, Alonzo Church (1903 – 1995) was able to express the lambda calculus (also written as λ-calculus) in a formulaic way. He defined an encoding of the natural numbers called the Church numerals. A function on the natural numbers is called λ-computable if the corresponding function on the Church numerals can be represented by a term of the λ-calculus.
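To make the idea of Church numerals concrete, here is a minimal sketch in Python (chosen only for illustration; Church worked with pure λ-terms, not a programming language). The numeral for n is a function that applies another function n times. The helper names `succ`, `add`, `mul`, `from_int` and `to_int` are my own and not part of Church's notation.

```python
# Church numerals: the number n is encoded as a function that
# applies f to x exactly n times.
zero = lambda f: lambda x: x


def succ(n):
    """Return the numeral n + 1: apply f one more time than n does."""
    return lambda f: lambda x: f(n(f)(x))


def add(m, n):
    """Apply f n times, then m more times."""
    return lambda f: lambda x: m(f)(n(f)(x))


def mul(m, n):
    """Repeat 'apply f n times' m times."""
    return lambda f: m(n(f))


def from_int(k):
    """Build the Church numeral for a non-negative Python integer."""
    numeral = zero
    for _ in range(k):
        numeral = succ(numeral)
    return numeral


def to_int(n):
    """Decode a numeral by counting how many times it applies f."""
    return n(lambda k: k + 1)(0)


three = from_int(3)
four = from_int(4)
print(to_int(add(three, four)))  # 7
print(to_int(mul(three, four)))  # 12
```

Addition and multiplication are λ-computable in the sense described above because each is written purely as function application on the numerals.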

1943 – 1945 – One of the earliest high-level programming languages designed for a computer was Plankalkül, developed for the German Z3 by Konrad Zuse between 1943 and 1945. However, it was not implemented until 1998 and 2000.

1949 – John Mauchly’s Short Code, proposed in 1949, was one of the first high-level languages ever developed for an electronic computer. Unlike machine code, Short Code statements represented mathematical expressions in an understandable form. However, the program had to be translated into machine code every time it ran, making the process slower than running the equivalent machine code.

At the University of Manchester, Alick Glennie developed Autocode in the early 1950s. This programming language used a compiler to automatically convert programs into machine code. The first version of the language and its compiler were developed in 1952 for the Mark 1 computer at the University of Manchester, and it is considered to be the first compiled high-level programming language.

By the 1950s, computer engineers had realized that assembly language was too complex and error-prone for building entire systems, and so the first modern programming language was born.

What was the first modern computer language?

FORTRAN (1957) – The name FORTRAN derives from “Formula Translating” system.

John Warner Backus (1924 – 2007), an American computer scientist, assembled a team in 1954 to define and develop Fortran for the IBM 704 computer. By 1957, Backus and his team had created Fortran. The components were very simple and provided the programmer with low-level access to the computer’s innards. Fortran was the first high-level programming language to be put to broad use.

John Warner Backus was also the inventor of the Backus-Naur form (BNF), a widely used notation to define formal language syntax. He also did research in function-level programming and helped to popularize it.

What programming languages were created after Fortran?

LISP (1958) – The name LISP derives from “List Processing”. Linked lists are one of Lisp’s major data structures, and Lisp source code is itself made up of lists.

Lisp was invented by John McCarthy in 1958 while he was at the Massachusetts Institute of Technology (MIT).
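The observation that Lisp code is itself made of lists can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not how any real Lisp is implemented: the `Cons` class, `make_list`, `to_python_list` and the tiny `evaluate` function (which handles only `+` and `*`) are hypothetical names invented for this example.

```python
from typing import Any, NamedTuple, Optional


class Cons(NamedTuple):
    """One cell of a singly linked list: a value and the rest of the list."""
    head: Any
    tail: Optional["Cons"]


def make_list(*items):
    """Build a linked list of Cons cells; the empty list is None."""
    result = None
    for item in reversed(items):
        result = Cons(item, result)
    return result


def to_python_list(cell):
    """Walk the linked list and collect its elements."""
    out = []
    while cell is not None:
        out.append(cell.head)
        cell = cell.tail
    return out


def evaluate(expr):
    """Evaluate a tiny expression stored as a linked list, e.g. (+ 1 (* 2 3))."""
    if not isinstance(expr, Cons):
        return expr  # numbers evaluate to themselves
    operator, *args = to_python_list(expr)
    values = [evaluate(arg) for arg in args]
    if operator == "+":
        return sum(values)
    if operator == "*":
        product = 1
        for value in values:
            product *= value
        return product
    raise ValueError(f"unknown operator: {operator!r}")


# The program (+ 1 (* 2 3)) is just a list whose first element names the operation.
program = make_list("+", 1, make_list("*", 2, 3))
print(evaluate(program))  # 7
```

Because the program is ordinary data, another program can build or rewrite it before evaluating it, which is the property the list-based source code gives Lisp.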

COBOL (1959) – The name COBOL derives from “Common Business-Oriented Language”. Cobol is a compiled English-like computer programming language designed for business use.

COBOL was designed in 1959 by the Conference on Data Systems Languages (CODASYL) and was partly based on previous programming language design work by Grace Hopper, commonly referred to as “the (grand)mother of COBOL”.

PASCAL (1970) – The name Pascal is in honor of the French mathematician and philosopher Blaise Pascal.

Pascal was developed by Niklaus Wirth, a Swiss computer scientist best known for designing several programming languages, including Pascal, and for pioneering several classic topics in software engineering.

Initially, Pascal was largely, but not exclusively, intended to teach students structured programming. A generation of students used Pascal as an introductory language in undergraduate courses.

C (1972) – The name was based on an earlier language called B, which is now almost extinct, having been superseded by C.

C was originally developed by Dennis Ritchie between 1969 and 1973 at AT&T Bell Labs, and used to re-implement the Unix operating system.

Many later languages have borrowed directly or indirectly from C, including C++, D, Go, Rust, Java, JavaScript, Limbo, LPC, C#, Objective-C, Perl, PHP, Python, Verilog (hardware description language), and Unix’s C shell.

C++ (1983) – The language was originally named “C with Classes”.

C++ was created by Bjarne Stroustrup, a Danish computer scientist. The motivation for creating a new language originated from Stroustrup’s experience in programming for his Ph.D.

In 1983, it was renamed from C with Classes to C++. New features were added, including virtual functions, function name and operator overloading, references, constants, type-safe free-store memory allocation (new/delete), improved type checking, and BCPL-style single-line comments with two forward slashes (“//”).

OBJECTIVE-C (1983) – Object-Oriented Extension of C.

Objective-C was created primarily by Brad Cox and Tom Love in the early 1980s at their company Stepstone. Both had been introduced to Smalltalk while at ITT Corporation’s Programming Technology Center in 1981. The earliest work on Objective-C traces back to around that time.

Objective-C is a thin layer on top of C, and is a “strict superset” of C, meaning that it is possible to compile any C program with an Objective-C compiler and to freely include C language code within an Objective-C class.

PERL (1987) – It was named Perl because Pearl was already taken.

Though Perl is not officially an acronym, there are various backronyms in use, the most well-known being “Practical Extraction and Reporting Language”.

Perl was originally developed by Larry Wall, a computer programmer and author.

Perl was developed as a general-purpose Unix scripting language to make report processing easier.

PYTHON (1991) – The name of the programming language was chosen by the author while in a slightly irreverent mood (and because he was a big fan of Monty Python’s Flying Circus).

Python is a widely used general-purpose, high-level programming language. Its design philosophy emphasizes code readability, and its syntax allows programmers to express concepts in fewer lines of code than would be possible in languages such as C++ or Java.

Python was conceived in the late 1980s and its implementation was started in December 1989 by Guido van Rossum at CWI in the Netherlands as a successor to the ABC language capable of exception handling and interfacing with the Amoeba operating system.

Van Rossum is Python’s principal author, and his continuing central role in deciding the direction of Python is reflected in the title given to him by the Python community: benevolent dictator for life (BDFL).

RUBY (1993) – The name “Ruby” originated during an online chat session between Matsumoto and Keiju Ishitsuka. Initially, two names were proposed: “Coral” and “Ruby”. Matsumoto chose the latter because it was the birthstone of one of his colleagues.

Ruby is a dynamic, reflective, object-oriented, general-purpose programming language. According to its authors, Ruby was influenced by Perl, Smalltalk, Eiffel, Ada, and Lisp.

Ruby was designed and developed in the mid-1990s by Yukihiro “Matz” Matsumoto in Japan. Matsumoto has said that Ruby is designed for programmer productivity and fun, following the principles of good user interface design.

JAVA (1995) – It was named Java for the amount of coffee consumed while developing the language.

Java is a general-purpose computer programming language that is concurrent, class-based, object-oriented, and specifically designed to have as few implementation dependencies as possible. It is intended to let application developers “write once, run anywhere” (WORA), meaning that compiled Java code can run on all platforms that support Java without the need for recompilation.

Java was originally developed by James Gosling, a Canadian computer scientist at Sun Microsystems and released in 1995 as a core component of Sun Microsystems’ Java platform. The language derives much of its syntax from C and C++.

PHP (1995) – The name PHP originally stood for Personal Home Page. It now stands for the recursive acronym “PHP: Hypertext Preprocessor”.

PHP is a server-side scripting language designed for web development but also used as a general-purpose programming language.

In 1994, Rasmus Lerdorf started the development of PHP when he wrote a series of Common Gateway Interface (CGI) binaries in C, which he used to maintain his personal homepage. He extended them to add the ability to work with web forms and to communicate with databases and called this implementation “Personal Home Page/Forms Interpreter” or PHP/FI.

JAVASCRIPT (1995) – It was developed under the name Mocha, and then the language was officially called LiveScript when it first shipped in beta releases of Netscape Navigator 2.0 in September 1995, but it was renamed JavaScript when it was deployed in the Netscape browser version 2.0B3.

JavaScript is a high level, dynamic, untyped, and interpreted programming language. It has been standardized in the ECMAScript language specification. Alongside HTML and CSS, it is one of the three essential technologies of World Wide Web content production; the majority of websites employ it and it is supported by all modern web browsers without plug-ins.

Despite some naming, syntactic, and standard library similarities, JavaScript and Java are otherwise unrelated and have very different semantics. The syntax of JavaScript is actually derived from C, while the semantics and design are influenced by the Self and Scheme programming languages.

JavaScript was originally developed by Brendan Eich, while he was working for Netscape Communications Corporation. Indeed, while competing with Microsoft for user adoption of web technologies and platforms, Netscape considered their client-server offering a distributed OS with a portable version of Sun Microsystems’ Java providing an environment in which applets could be run.

C# (2000) – The name “C sharp” was inspired by musical notation where a sharp indicates that the written note should be made a semitone higher in pitch.

C# is intended to be a simple, modern, general-purpose, object-oriented programming language. Its development team is led by Anders Hejlsberg. At the time of writing, the most recent version is C# 6.0, which was released on July 20, 2015.

In January 1999, Anders Hejlsberg formed a team to build a new language at the time called Cool, which stood for “C-like Object Oriented Language”. Microsoft had considered keeping the name “Cool” as the final name of the language but chose not to do so for trademark reasons. By the time the .NET project was publicly announced at the July 2000 Professional Developers Conference, the language had been renamed C#.

SCALA (2003) – The name Scala is a portmanteau of “scalable” and “language”, signifying that it is designed to grow with the demands of its users.

Scala is a programming language for general software applications. Scala has full support for functional programming and a very strong static type system. This allows programs written in Scala to be very concise and thus smaller in size than other general-purpose programming languages.

The design of Scala started in 2001 at the École Polytechnique Fédérale de Lausanne (EPFL) by Martin Odersky, following on from work on Funnel, a programming language combining ideas from functional programming and Petri nets. Odersky had previously worked on Generic Java and javac, Sun’s Java compiler.

GO (2009) – Go, also commonly referred to as golang, is a programming language developed at Google.

Go is recognizably in the tradition of C, but makes many changes to improve conciseness, simplicity, and safety.

Go was developed at Google in 2007 by Robert Griesemer, Rob Pike, and Ken Thompson. It is a statically typed language with a syntax loosely derived from that of C, adding garbage collection, type safety, some dynamic-typing capabilities, additional built-in types such as variable-length arrays & key-value maps, and a large standard library.

SWIFT (2014) – It was introduced at Apple’s 2014 Worldwide Developers Conference (WWDC). It underwent an upgrade to version 1.2 during 2014, and a more major upgrade to Swift 2 at WWDC 2015.

Swift is a multi-paradigm, compiled programming language created by Apple Inc. for iOS, OS X, and watchOS development. Swift is designed to work with Apple’s Cocoa and Cocoa Touch frameworks and the large body of existing Objective-C code written for Apple products. Swift is intended to be more resilient to erroneous code (“safer”) than Objective-C, and more concise.

Chris Lattner began developing Swift in 2010, with the eventual collaboration of many other programmers at Apple. Swift took language ideas “from Objective-C, Rust, Haskell, Ruby, Python, C#, CLU, and far too many others to list”. On June 2, 2014, the Worldwide Developers Conference (WWDC) application became the first publicly released app written in Swift.

List of important programming languages by year:

Here is a list of some of the most important programming languages from the 1950s to 2014:

- 1951 – Regional Assembly Language

- 1952 – Autocode

- 1954 – IPL (forerunner to LISP)

- 1955 – FLOW-MATIC (led to COBOL)

- 1957 – FORTRAN (First compiler)

- 1957 – COMTRAN (precursor to COBOL)

- 1958 – LISP

- 1958 – ALGOL 58

- 1959 – FACT (forerunner to COBOL)

- 1959 – COBOL

- 1959 – RPG

- 1962 – APL

- 1962 – Simula

- 1962 – SNOBOL

- 1963 – CPL (forerunner to C)

- 1964 – BASIC

- 1964 – PL/I

- 1966 – JOSS

- 1967 – BCPL (forerunner to C)

- 1968 – Logo

- 1969 – B (forerunner to C)

- 1970 – Pascal

- 1970 – Forth

- 1972 – C

- 1972 – Smalltalk

- 1972 – Prolog

- 1973 – ML

- 1975 – Scheme

- 1978 – SQL (a query language, later extended)

- 1980 – C++ (as C with classes, renamed in 1983)

- 1983 – Ada

- 1984 – Common Lisp

- 1984 – MATLAB

- 1985 – Eiffel

- 1986 – Objective-C

- 1986 – Erlang

- 1987 – Perl

- 1988 – Tcl

- 1988 – Mathematica

- 1989 – FL (Backus)

- 1990 – Haskell

- 1991 – Python

- 1991 – Visual Basic

- 1993 – Ruby

- 1993 – Lua

- 1994 – CLOS (part of ANSI Common Lisp)

- 1995 – Ada 95

- 1995 – Java

- 1995 – Delphi (Object Pascal)

- 1995 – JavaScript

- 1995 – PHP

- 1996 – WebDNA

- 1997 – Rebol

- 1999 – D

- 2000 – ActionScript

- 2000 – C#

- 2001 – Visual Basic .NET

- 2003 – Groovy

- 2003 – Scala

- 2005 – F#

- 2007 – Clojure

- 2009 – Go

- 2011 – Dart

- 2012 – Rust

- 2014 – Swift

This list will be updated as more programming languages are created and implemented.